AI-related Vulnerabilities within CVEs: Are We Ready Yet? A Study of Vulnerability Disclosure in AI Products

17 Oct 2025

Marcello Maugeri

Gianpietro Castiglione

Mario Raciti

Giampaolo Bella

Abstract

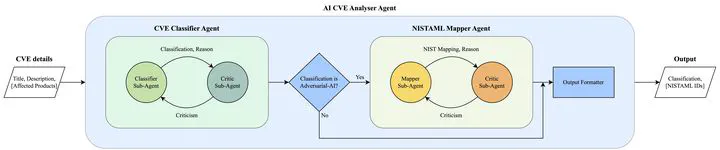

The integration of Artificial Intelligence (AI) models into software systems has introduced new risks. While the Common Vulnerabilities and Exposures (CVE) Program standardises the disclosure of software security issues, it remains unclear whether it is ready to capture AI-related vulnerabilities adequately. This paper presents a large-scale analysis of AI-related vulnerabilities disclosed in the CVE list from 2021 to 2025. We design a multi-agent system that (i) implements an actor-critic pattern to classify CVEs into three categories (Non-AI, AI Supply Chain, and Adversarial AI) and (ii) maps the Adversarial AI category to the newly published NIST AI 100-2e2025 taxonomy. Our analysis reveals that, out of ~128,000 CVEs, ~1.57% are AI-relevant, with the larger share (~1.05%) related to traditional vulnerabilities in the AI Supply Chain rather than to direct Adversarial AI threats (~0.52%). The trend reveals recent efforts, from 2024 onwards, to disclose vulnerabilities with Adversarial AI implications. However, we stress that the underrepresented categories of the NIST AI 100-2e2025 taxonomy require more coverage from the research community and more detailed reporting. Our findings underscore both the growth of AI-specific vulnerabilities and the limitations of current disclosure practices in capturing the adversarial threat landscape. We ultimately advise further investigation of the under-explored Adversarial AI categories and, by employing more advanced LLMs, the integration of more detailed CVE write-ups and of sources beyond public CVE records (e.g., external references such as the full advisory text and related CWE/CAPEC entries).
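The actor-critic classification step can be illustrated with a minimal sketch of its control flow. This is not the authors' implementation: in the paper both agents are LLM-based, whereas here `toy_actor` and `toy_critic` are hypothetical keyword heuristics standing in for them, and the names `classify`, `Verdict`, and `max_rounds` are illustrative assumptions. The actor proposes one of the three categories, the critic either accepts it or returns feedback, and the actor retries with that feedback up to a fixed number of rounds.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# The three labels named in the abstract.
CATEGORIES = ("Non-AI", "AI Supply Chain", "Adversarial AI")


@dataclass
class Verdict:
    """Critic's review of a proposed label."""
    accepted: bool
    feedback: str = ""


# Hypothetical agent signatures (assumptions, not the paper's interfaces).
Actor = Callable[[str, Optional[str]], str]   # (description, feedback) -> label
Critic = Callable[[str, str], Verdict]        # (description, label) -> verdict


def classify(description: str, actor: Actor, critic: Critic, max_rounds: int = 3) -> str:
    """Actor proposes a label; critic accepts it or sends feedback for a retry."""
    feedback: Optional[str] = None
    label = "Non-AI"
    for _ in range(max_rounds):
        label = actor(description, feedback)
        if label not in CATEGORIES:
            raise ValueError(f"unknown category: {label!r}")
        verdict = critic(description, label)
        if verdict.accepted:
            return label
        feedback = verdict.feedback
    return label  # no agreement within max_rounds: keep the last proposal


# Toy keyword stubs standing in for LLM agents (hypothetical heuristics).
def toy_actor(description: str, feedback: Optional[str]) -> str:
    text = description.lower()
    if feedback and "supply chain" in feedback:
        return "AI Supply Chain"
    if any(k in text for k in ("prompt injection", "adversarial", "data poisoning")):
        return "Adversarial AI"
    if any(k in text for k in ("pytorch", "tensorflow", "llm", "model")):
        return "AI Supply Chain"
    return "Non-AI"


def toy_critic(description: str, label: str) -> Verdict:
    # Push back when an "Adversarial AI" label is given to a classic memory bug.
    if label == "Adversarial AI" and "buffer overflow" in description.lower():
        return Verdict(False, "classic bug in an AI component: consider supply chain")
    return Verdict(True)


if __name__ == "__main__":
    for cve in (
        "Prompt injection in an LLM chatbot plugin",
        "Buffer overflow triggered by adversarial input to a PyTorch model loader",
        "SQL injection in a login form",
    ):
        print(f"{classify(cve, toy_actor, toy_critic):15} <- {cve}")
```

In this toy run, the second description is first labelled Adversarial AI, the critic rejects that label, and the actor's retry settles on AI Supply Chain, mirroring the distinction the abstract draws between traditional bugs in AI components and genuinely adversarial threats.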

Type

Publication

AISec'25 (CCS 2025)